As back-to-school season starts, I am reminded of high school. I was a good math and science student, and always in the most advanced classes. However, I don’t remember having any passion for it, and I dreaded taking tests. One of my big complaints was how abstract it all was - it was as if the triangles of geometry and trigonometry and the frictionless flat surfaces of physics existed in an alternative universe, and the only way to solve the problems was to memorize every rule and what scenario it should be applied to. I couldn’t see how something like this could ever be applied to the real world, and my teachers never had a good answer beyond “it is valuable to learn this for the sake of learning” or “scientists use math in the lab”. While both of those things are true, this did not inspire 16 year old me or help me to fully understand the concepts.

This is perhaps naive of me, but I was shocked when I started taking engineering classes in college and learned that my high school math and science classes were the basis for all the technology that we use on a regular basis, from the buildings we live to the phones we increasingly rely on. Even more shockingly, I found that grounding these topics in the real world make it easier to visualize and solve math problems. Trigonometry is complex and hard to understand, but trying to figure out how far to extend a ladder to get to the second-floor window is a simple problem. Newtonian physics is exhausting and awful, but I can easily determine when to change the gears on my bike. As I progressed in my career and started working in the computer vision realm, I realized that one hot-button tech topic fully encapsulates the real-world applications all the STEM classes I took in high school - self-driving cars, specifically, the computer vision pipeline needed for the car to navigate and avoid obstacles.

This article marks the first in my first-ever series. The goal of the series is to walk through the computer vision pipeline of a self-driving car to discuss how different aspects relate to different high school math and science classes. The rest of this article is going to give an overview of what “computer vision” means and how to think about a camera in the same way a computer vision engineer thinks about it. These articles are going to include equations and diagrams that you may remember negatively, but I am not going to dwell on them or even use them”. Instead, I want to help develop an intuition for how and why they work. If you are interested in fully understanding what the equations or diagrams mean, I recommend focusing on one part at a time until you can see the whole picture.

What is computer vision?

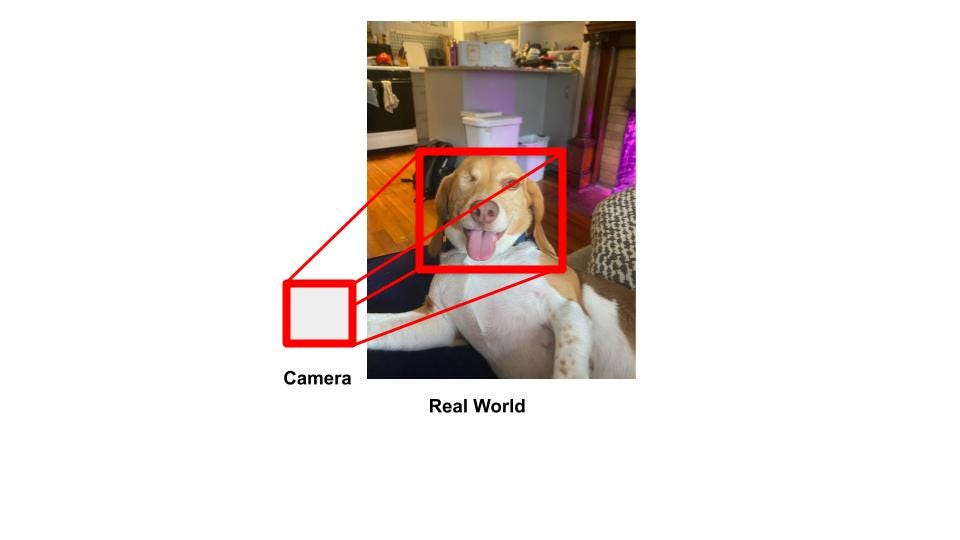

Computer vision is the field of study aims to program computers to pull out meaningful information from pictures and videos. In a sentence, it helps the computer “see” in the same way a human does. In order to understand how that works, you need to have a high-level understanding of what a camera is. A camera is a sensor that creates an image when it registers reflects light that has interacted with physical objects. That sounds complicated, but if you have ever taken a photo you already know this, and it can be easily visualized:

What does it mean that the camera “registers reflected light”? In simple terms, the camera uses optics to “see” the reflected light. This reflected light is broken up into smaller parts called pixels for storage purposes. Pixels encode the reflected light in a way that the camera can understand. Usually for color cameras this is in RGB (red-blue-green) format, where each pixel stores how much red, green, and blue would be necessary to re-create that bit of light. This is usually in a range of 0-255, because it’s most efficient to store things in powers of two. The amount of pixels in the image is called its resolution, and this is usually represented as the height and width of the pixels. For example, in the picture below, the resolution would be 5 x 6, and the top right pixel could be re-constructed with values of (Red: 133, Green: 27, Blue: 200)

Another important factor to consider is how much of the real world you are capturing. This is called the field of view (FOV) of the camera, and in the computer vision world this is represented in degrees, like in the diagram below:

A larger field of view can capture more area, but it may look distorted. Other factors of the camera that are not relevant here, such as the focal length and polarization, can affect how realistic the images look to humans.

The final aspect of a camera that we care about is dependent on the field of view and the resolution, but also how far away the camera is from whatever it is imaging. It is called ground square distance (GSD), and it measures how far away the centers of each pixel are in the real world. If we look at our example picture again, we can see that my dog Penny is closer to the camera than the kitchen in the background, so the ground square distance for the pixels representing her would be smaller than the ground square distance for the pixels representing the kitchen.

Computer vision experts care about these specific qualities because they are what allow us to write programs that extract information from the image. If we know what colors and pixels represent Penny, we can train a neural network to recognize her. If we know how much ground is covered in a given pixel, we can figure out the size of an object. It is geometry, trigonometry, and physics that allow us to do these calculations.

Side Note

In most research fields, it is standard practice to have default examples that are used across publications and code libraries. This is particularly useful for computer science applications because then you can get direct comparisons between algorithms. Since the 1970s, the default image for computer image applications has been “Lena”, a cropped image of a Playboy model. The story, documented in more detail in the Atlantic, is that researchers at the University of Southern California’s Signal and Image Processing lab were looking for an image of a human face, and one happened to have a copy of the magazine on hand that featured Swedish model Lena Söderberg. They ripped out the top third of the centerfold to test their algorithm, and clearly preferred her to the human stock images available at the time because they uploaded the photo to their world-renowned image processing library on early precursors to the the Internet, and it proliferated throughout the field. Even today, popular computer vision packages such as OpenCV still use Lena in their documentation. Lena or Playboy have never been paid for this usage, and as far as I can tell it was years before anyone told her or asked her what she thought. I am not interested in discussing the societal norms of the 1970s or if it was an appropriate decision at the time. However, I believe that the time has come to retire Lena. At a time when the tech world in particular is struggling to nurture and retain female talent and the world at large is still struggling with basic gender equality, it is not acceptable to use photos of nude Playboy models as the basis of such an important field. Using her shows that women are only welcome in the field of computer vision if they are being objectified and is another micro-aggression that female computer scientists have to deal with constantly. Even the debate about if Lena should still be used erases women’s feelings - the Atlantic article linked above quotes several researchers who don’t care about women’s feelings but instead worry that using over-using her photo will cause technical problems. I would like to use my small platform to advocate for the retirement of Lena, and I will instead be using photos of my dog Penny for all examples going forward.